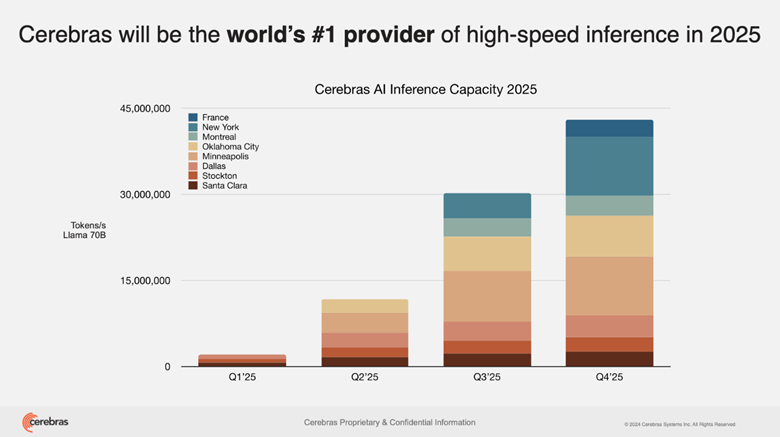

Cerebras AI is expanding its infrastructure with six new data centers across North America and Europe. The goal is to offer faster, cheaper, and more accessible AI inference services that can meet growing demand for efficient AI processing as models become more complex.

The buildout is a strategic response to demand for streamlined AI processing. Businesses and developers are looking for more scalable solutions as AI models grow more complex and the need for high-speed inference increases.

With this expansion, AI chipmaker Cerebras not only strengthens its AI infrastructure but also highlights a broader industry trend: prioritizing efficiency and accessibility in AI deployment. As the company sets up its new data centers, attention turns to how the expansion will reshape the AI ecosystem and drive wider adoption.

Democratizing AI Infrastructure

The new Cerebras AI data centers will be located in Minneapolis, Dallas, Oklahoma City, New York, Montreal, and France. The expansion is expected to increase Cerebras’s inference capacity twenty-fold, to more than 40 million tokens per second. About 85% of this capacity will be in the United States, underscoring the company’s focus on domestic AI infrastructure.

Image Credit: Cerebras

Beyond infrastructure, Cerebras has also formed several key partnerships aimed at broadening access to AI:

- Hugging Face Integration: Cerebras AI has integrated its services into the Hugging Face platform, giving more than five million developers direct access to high-speed AI inference. Through this integration, developers can run popular models at more than 2,000 tokens per second, significantly faster than leading GPU-based solutions.

- AlphaSense Collaboration: Through a partnership with AlphaSense, a market intelligence platform, Cerebras aims to deliver AI-driven insights up to ten times faster than before. The improvement is expected to benefit the many Fortune 100 companies that rely on AlphaSense for market data.

Accessibility Fused with Affordability

Cerebras’s recent announcements signal a strategic intent to make high-performance AI chips more affordable and accessible. Its launch of an AI inference tool to challenge Nvidia reinforces that commitment to reducing AI inference costs.

With its expanding data center footprint and its collaborations with platforms such as Hugging Face and AlphaSense, the company is working to lower cost barriers and make AI accessible to a wider range of industries.

Cerebras’s challenge to Nvidia’s AI dominance has become a serious one. These developments position the company as a leader in democratizing AI technology, with a focus not only on speed but also on accessibility and affordability in the fast-moving AI market.

Inside Telecom provides you with an extensive list of content covering all aspects of the tech industry. Keep an eye on our Intelligent Tech sections to stay informed and up-to-date with our daily articles.