Cloud computing is going beyond Earth’s limits, as servers in space and alternative data center designs promise cheaper energy, massive AI scale, and new architectures that challenge the future of infrastructure and sustainability.

As AI expands faster than the power systems that support it, it is time to rethink traditional data centers and find new ways for the cloud to grow without draining energy, water, and land.

According to the International Energy Agency, electricity use by data centers could more than double by 2030. This warning is helping push cloud providers to rethink the foundations of cloud infrastructure, on Earth and in space.

A Look into Space Cloud Computing

Cloud computing is reaching a critical point. Simone Larsson, head of enterprise AI at Lenovo, told CNBC that there is going to be a “tipping point” where data center designs will no longer be fit for purpose.

In response, companies are restructuring how computing works. Amazon Web Services, Google Cloud, and Microsoft Azure are building custom processors that reduce energy use while supporting AI growth, allowing early forms of space cloud computing to be taken seriously.

The Arm-based Graviton chips from Amazon Web Services (AWS) offer up to 40% better price performance and use 60% less energy. Google’s Axion processors now run Gmail and Workspace, while Microsoft’s Cobalt 100 powers Teams and Azure SQL, keeping platforms responsive as demand grows.

These gains matter as cloud providers explore data centers in orbit, where constant solar energy could relieve pressure on crowded power grids on Earth.

Meanwhile, Lenovo has worked with architects to brainstorm underground facilities, modular data villages, and systems that reuse waste heat. These concepts support future cloud infrastructure without widening the physical footprint of the cloud.

“We interact with [data centers] every day, with our computers and with our phones. But this gentle giant, in the background, is putting massive pressure on water and our resources,” said James Cheung, partner at Mamou-Mani.

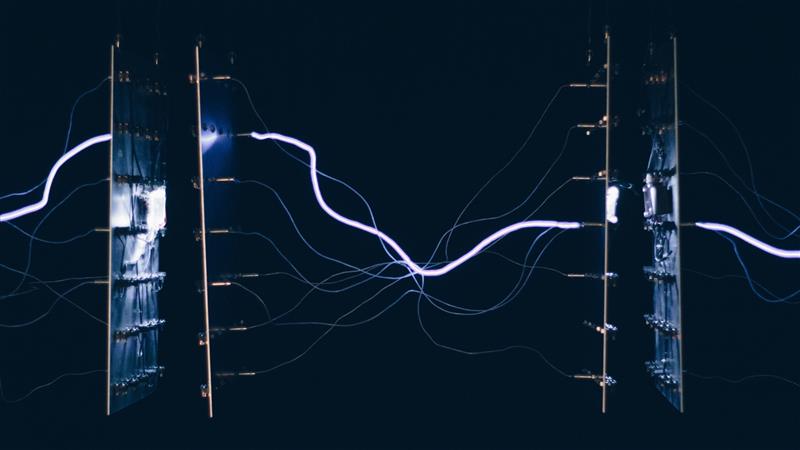

Orbit Data Center

A group of companies is now testing servers in space to unlock new energy sources and scale AI beyond land-based limits. Projects supported by Google, Alibaba, and Nvidia-backed startups are trying out solar-powered data centers in orbit.

These systems are also designed to operate as part of the emerging low Earth orbit (LEO) infrastructure.

For example, European researchers are now studying space-based wireless power transmission as a way to move energy from orbiting systems back to Earth-based networks. Since 2020, around $76 million (70 million euros) has flowed into research tied to the orbital data center concept, according to the European Space Policy Institute (ESPI).

However, challenges remain.

“Radiation-hardened hardware, cooling in the vacuum of space, and the extremely high cost of launching large, power-dense compute systems into orbit are major hurdles,” said S&P Global’s Liu.

Yet, companies are still testing chips and systems designed for space servers, betting that launch costs will fall.

“If you asked me now, this is unrealistic in the near-term,” said Jermaine Gutierrez, research fellow at ESPI. “In the long run, however, the question is whether terrestrial developments and continued cost savings thereof, outpace the cost savings from deployment in space.”

For now, servers in space remain tentative. But combined with more efficient chips and new designs on Earth, they signal a deeper shift.

With fast-growing AI demand, the cloud may come to rely on orbital data centers, servers in space, and other off-world systems to keep scaling. In the future, servers in space may no longer sound like science fiction, but like the next chapter of how the internet is built.
