New reports suggest that AI used in hospitals, medical devices, and consumer apps is producing errors, misdiagnoses, and even injuries, even as intelligent technologies transform healthcare into digital health.

Although AI promises faster diagnoses and smarter decision-making, evidence shows the technology can also mislead doctors and patients alike. Digital health technology nonetheless remains a critical tool for hospitals navigating this new landscape.

From surgical navigation systems that misplace instruments to apps giving dangerous medical advice, the rush to deploy AI may be outpacing the safeguards designed to protect human lives. Digital health tools are increasingly part of this debate as hospitals adopt more AI-based solutions.

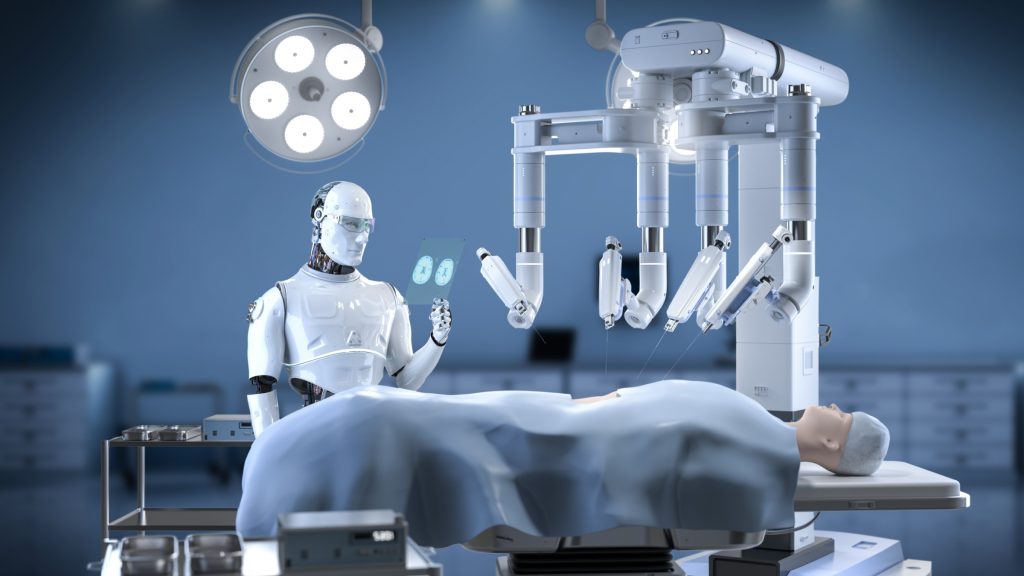

AI-Guided Surgery Goes Wrong

According to Reuters, the TruDi Navigation System is designed to help ear, nose and throat (ENT) surgeons treat chronic sinusitis.

Before AI was integrated, the device had seven unconfirmed malfunctions reported over three years. Since the AI upgrade, the Food and Drug Administration (FDA) has received more than 100 reports of errors and at least 10 reports of serious patient injury. Surgeons have allegedly punctured skull bases, caused cerebrospinal fluid leaks, or triggered strokes after following faulty AI guidance. These cases underscore the importance of human oversight when integrating AI into clinical practice.

Two patients who suffered strokes have filed lawsuits arguing that “the product was arguably safer before integrating changes in the software to incorporate artificial intelligence than after the software modifications were implemented.”

Researchers note that AI-based medical devices have a 43% recall rate within a year, about twice the rate of non-AI devices, indicating that commercialization may be outpacing rigorous testing. Some hospitals are now exploring digital health technology for safer surgical workflows.

Even AI systems meant to provide diagnostic support, such as Sonio Detect for fetal imaging, have reportedly mislabeled body parts, while Google’s medical AI has produced “hallucinations” of body structures, demonstrating that errors can occur even in less risky contexts. eHealth programs are being tested to monitor AI outputs more effectively.

Consumer Apps and False Assurance

Outside hospitals, AI-powered digital health apps such as “Eureka Health: AI Doctor” and “AI Dermatologist” are proliferating, often without regulatory approval. They promise diagnostic and treatment guidance, sometimes claiming near-perfect accuracy.

Users have reported dangerously misleading outputs, such as overestimated cancer risks or failures to recognize real conditions. Meanwhile, hospitals are starting to adopt robotic digital health accelerator programs that aim to combine innovation with safety.

Dr. Cem Aksoy, a medical resident in Ankara, recounted a patient panicking over ChatGPT-generated cancer predictions that turned out to be false.

“When someone is distressed and unguided, an AI chatbot just drags them into this forest of knowledge without coherent context,” he said. Surveys of medical practitioners reveal that 40% rely on AI daily, yet 91.8% have encountered AI “hallucinations” and 84.7% believe they could affect patient health.

Many institutions are experimenting with digital health connect networks to improve AI oversight.

Experts warn that while digital health offers potential benefits in diagnostic pattern recognition, questions of legal liability, regulatory standards, and human oversight must be clarified to prevent harm.

Some hospitals have adopted Healthtech solutions that integrate AI alongside traditional clinical reviews.

AI-assisted surgery, including robot-assisted surgery, shows promise but also highlights the challenges of relying on multiple technologies at once, particularly when robots are used in complex procedures.

As Dr. Rachel Draelos, an AI health consultant, points out, “There’s no way that all of these apps actually have a dataset that covers all [conditions],” underscoring the risks of over-reliance on AI in medicine. Without robust regulation and human supervision, the AI revolution in healthcare may be less a leap forward and more a cautionary tale of speed outpacing safety.

Digital health initiatives, combined with ongoing eHealth evaluation, can help ensure patient-centered care.

Inside Telecom provides you with an extensive list of content covering all aspects of the Tech industry. Keep an eye on our Medtech section to stay informed and updated with our daily articles.