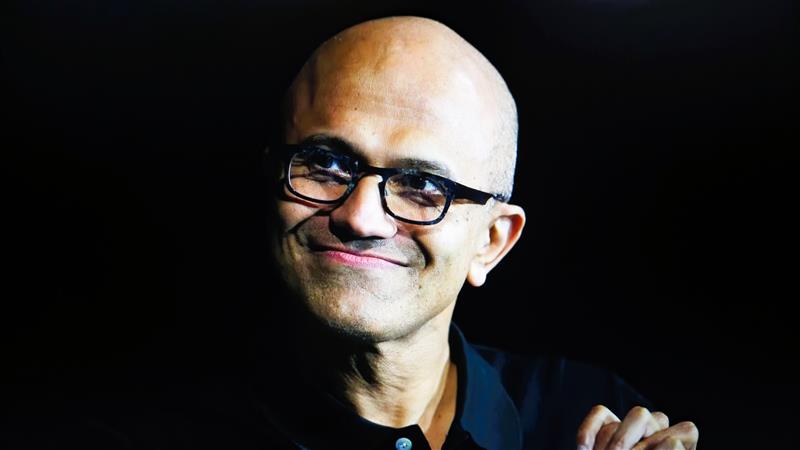

On Thursday, Microsoft CEO Satya Nadella confirmed that the company will maintain its aggressive procurement of AI processors from Nvidia and AMD as it begins deploying its own custom-designed chip, the Maia 200. Microsoft’s chips will supercharge Azure infrastructure and internal “Superintelligence” AI projects.

Microsoft’s Maia 200 deployment highlights the company’s strategy of balancing in-house innovation with strategic partnerships, all while expanding its cloud dominance and capitalizing on a booming AI-driven market.

CFO Amy Hood noted in an internal memo that investments in Microsoft AI chips, coding tools, and deals with OpenAI and Anthropic helped commercial bookings surge year over year.

Maia 200 Chips Will Be Microsoft’s Hail Mary

The Maia 200 chips are designed as an “AI inference powerhouse,” optimized for compute-heavy workloads like running advanced AI models in production. Microsoft claims the chip outperforms Amazon’s Trainium and Google’s latest TPUs, putting the company in direct competition with other cloud giants.

Mustafa Suleyman, DeepMind co-founder and head of Microsoft’s Superintelligence team, celebrated the rollout on X.

x.com/mustafasuleyman/status/2015825111769841744

Nadella emphasized that even with its own AI chips, the company will continue buying from Nvidia and AMD.

“Because we can vertically integrate doesn’t mean we just only vertically integrate,” he added.

The rollout also supports OpenAI’s models running on Azure AI infrastructure, reflecting Microsoft’s dual strategy of internal innovation alongside commercial partnerships. Hood’s memo noted that revenue from Azure and other cloud services grew 39%, slightly above expectations, amid growing demand for AI integration across customer workloads.

Microsoft Chip Investments Drive Growth

Beyond its AI chips, Microsoft is aggressively expanding its AI ecosystem. Hood pointed to the launch of the GitHub Copilot SDK and deals with OpenAI and Anthropic as key drivers of revenue and commercial growth.

Capital expenditure on Microsoft’s in-house chips, GPUs, CPUs, and datacenter infrastructure hit $37.5 billion, a record for the company, ensuring capacity for R&D and AI deployment.

“Customers are running larger, more complex workloads with us and increasingly integrating AI into the core of how they operate,” Hood wrote.

Microsoft Cloud revenue now exceeds $50 billion, growing 26%, while Microsoft 365 commercial cloud and Dynamics 365 also saw double-digit growth, underscoring how custom AI chips and software tools are reshaping demand across Microsoft’s platforms.

Microsoft is betting that, as AI adoption accelerates, owning both hardware and software, particularly its own chips, will provide a long-term edge. Suleyman’s team will run its leading models on Maia 200, while commercial clients benefit from enhanced performance on Azure, a strategy that blends vertical integration with collaborative AI partnerships.

Microsoft’s chips are essential to realizing this vision, driving innovation and solidifying the company’s lead in the AI race.
