At the University of Massachusetts Amherst, a team of researchers has pioneered a significant advancement in robotic guide dogs, driven by firsthand insights from guide dog users and trainers.

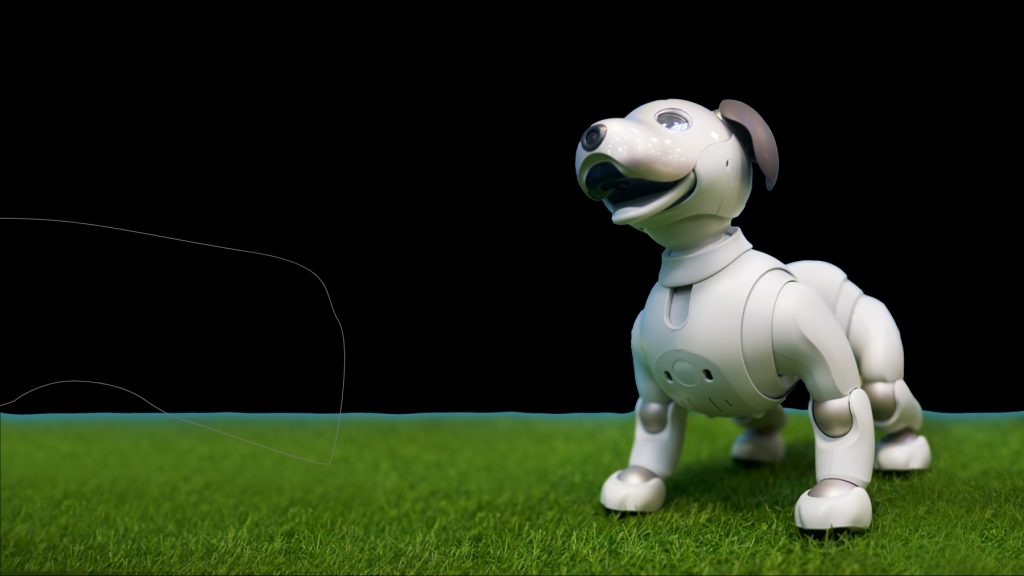

While traditional guide dogs offer autonomy and mobility for people with visual impairments, they remain out of reach for many because of the scarcity of trained dogs, high training costs, allergies, and other practical barriers.

Recognizing the potential for robots to fill this gap, the UMass Amherst team, led by Assistant Professor Donghyun Kim of the Manning College of Information and Computer Sciences, examined the existing challenges and user expectations for guide dog alternatives.

“Our initial step wasn’t to jump straight into technology development but to first understand the user experience with animal guide dogs and what users are actually expecting from robotic alternatives,” explained Kim.

The team conducted extensive semi-structured interviews and observational studies with 23 visually impaired guide dog handlers and five trainers, using thematic analysis to identify recurring themes in the responses.

One critical insight was the nuanced requirement for a balance between robot autonomy and user control. Contrary to the initial assumption that a robotic guide dog might function like an autonomous vehicle, the research revealed that users prefer to direct the overall navigation while relying on the dog – or in this case, the robot – for local obstacle avoidance.

“This balance is delicate,” Kim noted. “Users need to feel safe and in control, rather than being passively led by a fully autonomous system.”

The research highlighted specific technical requirements that a robotic guide dog must meet to be practically useful. These include battery life long enough to support lengthy commutes, camera systems capable of detecting overhead obstacles, and audio sensors to detect hazards in obscured areas. The device must also understand contextual commands like ‘sidewalk,’ which implies following the path rather than moving in a straight line, and assist users in tasks such as boarding the correct bus and locating a seat.

The comprehensive study not only sets a blueprint for developing deployable robotic guide dogs but also serves as a guiding document for other developers in the field. “We’ve provided directions on how to develop these robots so they can be effectively used in real-world scenarios,” said Hochul Hwang, a doctoral candidate in Kim’s robotics lab and the paper’s first author.

This award-winning research is not just a theoretical exercise but a foundation for future innovations that can enhance the independence of individuals with visual impairments. The team’s commitment to blending advanced robotics with human-centric design promises to propel this niche field forward, making autonomous guide dogs a viable and valuable aid for those who need them.

Reflecting on the broader impact of their work, Kim expressed hope that their findings would inspire a wide range of researchers in robotics and human-robot interaction.
