On Tuesday, Meta announced Llama 3.1, the latest version of its open-source AI model. It comes in three versions, the largest of which is Meta’s most powerful AI model yet.

The Llama 3.1 large language model (LLM), which is available for free, reflects Meta’s investment in AI as it works to catch up with rivals such as OpenAI, Anthropic, Google, and Amazon. The tech giant’s announcement noted a deep partnership with the largest chipmaker, Nvidia, which supplies the graphics processing units (GPUs) required to train Meta’s AI models, including Llama 3.1.

Intelligent Partnerships

Unlike other leading AI companies such as OpenAI, which intends to profit from its models by selling them, Meta does not intend to launch its own enterprise business.

The Facebook parent is partnering with big tech companies, such as Amazon Web Services, Google Cloud, Microsoft Azure, Databricks, and Dell, to offer Llama 3.1 through their cloud platforms.

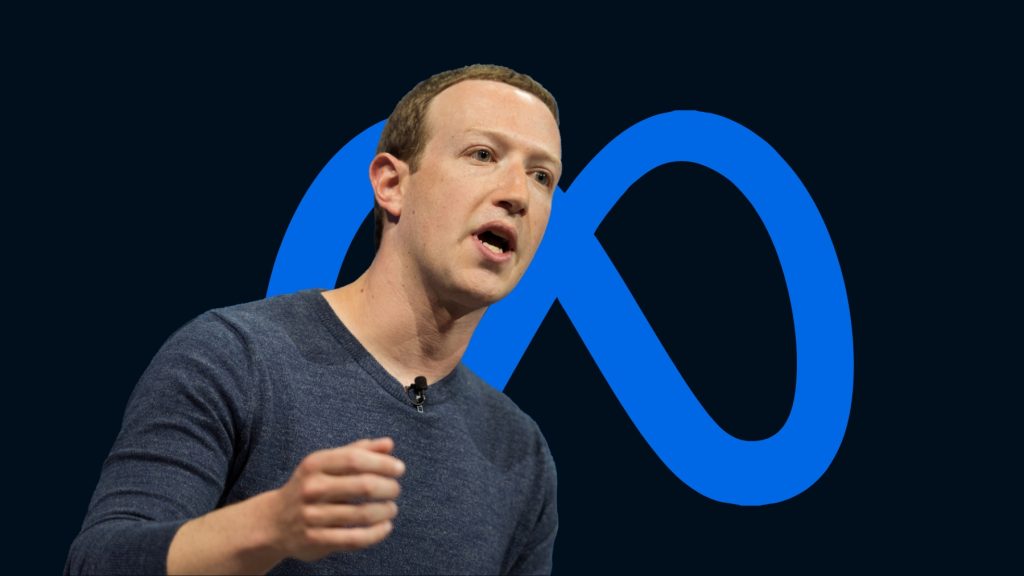

Meta’s CEO, Mark Zuckerberg, previously said, “We’re building teams internally to enable as many developers and partners as possible to use Llama, and we’re actively building partnerships so that more companies in the ecosystem can offer unique functionality to their customers as well.” A spokesperson, however, noted that the financial benefits are minor. In addition, the company believes that by making such AI technologies available to all, it can attract top talent and reduce computing costs.

As for Meta and Nvidia, the partnership benefits both sides. For the chipmaker, the adoption of open-source models can boost demand for its chips. Meta, in turn, needs the latest and most reliable GPUs to train its most powerful AI models.

The technology conglomerate is working hard to promote a community of developers building AI apps with its software.

In an interview with CNBC, Meta’s VP of AI partnerships, Ash Jhaveri, said the company’s strategy is valuable because it gives developers access to Meta’s internal tools and encourages them to build on those tools. Meta, in turn, benefits from the improvements those developers make.

Different Aspects of Llama 3.1

Large language models with more parameters can generally accomplish more than smaller LLMs, such as understanding context in long streams of text, solving complex math equations, and even generating synthetic data that can presumably be used to improve smaller AI models. That is why the latest Llama 3.1, whose largest version has 405 billion parameters, is expected to stand out from other AI models.

Meta is also releasing smaller versions of Llama 3.1, called Llama 3.1 8B and Llama 3.1 70B. They are improved versions of their predecessors, and according to the company, they can be used to power chatbots and software coding assistants.

Final Thoughts

Taking an open-source approach is a point in favor of Meta’s powerful AI, since it makes such technologies easier to access and lets people benefit from them at no cost when they could not otherwise afford to. But privacy remains a challenge that Meta must address because, as open-source models, they will surely have access to huge amounts of data, some of which might be sensitive and personal.
