Big Tech’s Safeguards and Security Failed the Children

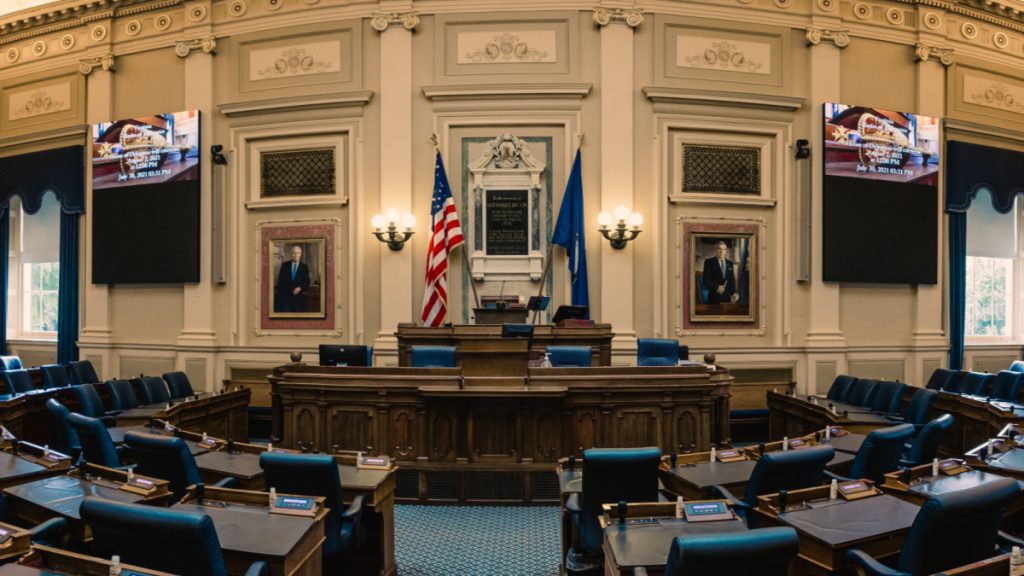

As concerns about child safety online escalate, five prominent tech leaders are set to testify before Congress today over their companies' failure to protect children online.

- Lawmakers are unhappy with the companies' inadequate protection of children from explicit content and potential predators.

- Despite the companies' recent efforts, the public remains dissatisfied with the measures.

Five tech leaders will testify before Congress later today about their companies' efforts to address the crisis of online child sexual exploitation.

Lawmakers have long criticized major tech companies for what they see as insufficient safeguards to protect children from explicit content and potential predators.

The hearing follows debates over stricter regulation, with legislators demanding explanations. The executives will have to address allegations that Big Tech has not done enough to protect children from online threats, particularly sexual exploitation.

In November 2023, following a whistleblower's testimony, Congress subpoenaed three tech CEOs to testify at the hearing after their "repeated refusals" to cooperate:

- Evan Spiegel, Co-Founder and CEO of Snap Inc.

- Jason Citron, CEO of Discord Inc.

- Linda Yaccarino, CEO of X Corp.

Mark Zuckerberg, Founder and CEO of Meta, and Shou Chew, CEO of TikTok Inc., however, agreed to testify voluntarily. At the time, U.S. Senators Dick Durbin and Lindsey Graham stated, "We've known from the beginning that our efforts to protect children online would be met with hesitation from Big Tech. They finally are being forced to acknowledge their failures when it comes to protecting kids."

While this hearing marks Zuckerberg's eighth time testifying and Chew's second, it is the first for the other three CEOs. The high-profile hearing will focus on children's safety online, amid growing concerns about mental health and sexual exploitation.

All these tech companies have failed, in one way or another, to keep children adequately safe on their platforms. Despite their recent efforts, the public is not satisfied and believes the companies could be doing more.

- Discord Trust and Safety changed its child safety policies.

- Meta provided new guidance and tools to help moderation, among other changes.

- X shared its zero-tolerance strategy.

- Snapchat now allows parents to block their kids from using the platform’s AI.

- TikTok, for its part, has introduced measures of its own.

The hearing will take a magnifying glass to all these efforts and more. Lawmakers' questions are expected to expose the companies' shortcomings, from algorithms that promote illicit content to the risks of exploitation.

Following this hearing, the Senate hopes to advance several bills targeting children's online safety. A prime example is the Kids Online Safety Act (KOSA), which would require platforms to take reasonable measures to prevent harm to minors. Snap has recently broken ranks with its peers by announcing its support for the bill.

The time has come for these tech CEOs to answer for their decisions.

Inside Telecom provides you with an extensive list of content covering all aspects of the tech industry. Keep an eye on our Tech sections to stay informed and up-to-date with our daily articles.