Google is coming into the AI-image generator space, and its coming in storm. Google is releasing its text-to-image AI algorithms with utmost caution.

Google hasn’t made the system accessible to the general public, despite the fact that the company’s Imagen model generates results comparable – if not surpassing – in quality to OpenAI’s DALL-E 2 or Stability AI’s Stable Diffusion.

Here is why you should be overly excited about Google’s, and why it is truly incredible. But first a brief overview.

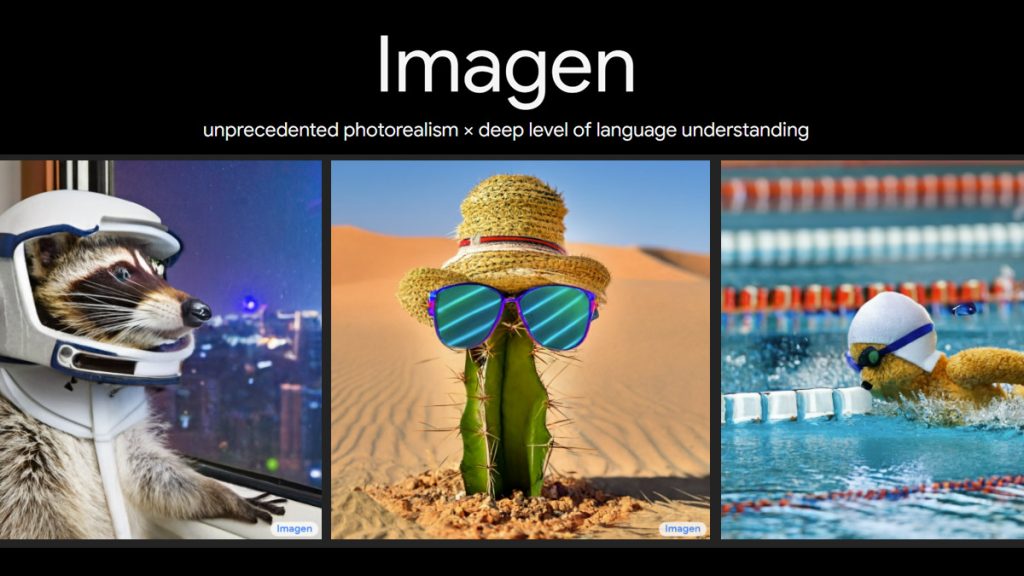

Introduction and Summary of Google’s Imagen

Imagen is an AI program that generates photorealistic visuals from text input. The text is encoded into embeddings by Imagen AI using a big frozen T5-XXL encoder. The text embedding is mapped onto a 64 by 64 picture using a conditional diffusion model.

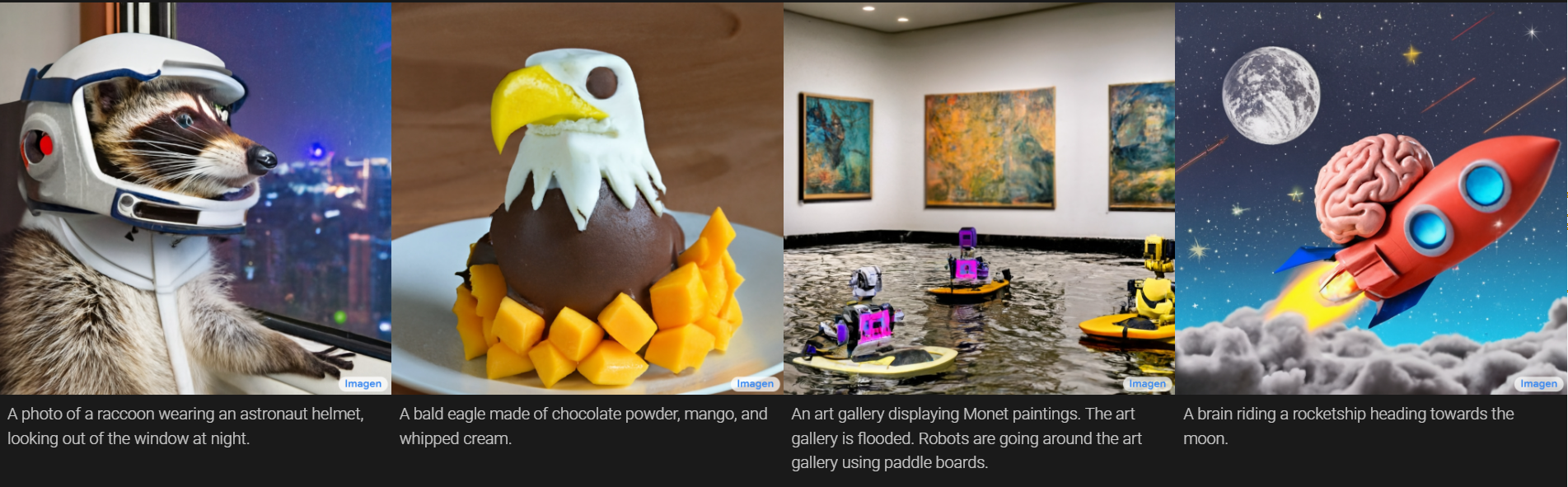

The results are pretty insane to say the least. Looking at the below examples we can safely say that google has

In their research paper, Google presents their text-to-image diffusion model with a profound comprehension of language and an exceptional level of photorealism. Imagen AI relies on the strength of diffusion models for high-fidelity image synthesis and builds on the effectiveness of big transformer language models for text comprehension.

In their research, the main finding is that generic large language models, which are trained on text-only corpora, are surprisingly effective at encoding text for image synthesis: in Imagen, expanding the language model’s size significantly improves sample fidelity and image-text alignment compared to expanding the image diffusion model’s size.

In layman’s terms, its very advanced. Here are a few samples found on Imagen’s website to illustrate the above.

Now in the example below found on Imagen’s website, notice something amazing. This AI can spell words perfectly. This is a major step ahead of all other AI image generators, who only

How Imagen AI Works

It is difficult to describe how impressive this AI actually is. They create wholly original visuals that aesthetically represent the semantic information in the caption. How does Imagen AI achieve this?

A text encoder receives the caption as its first input. This encoder transforms the caption’s textual information into a numerical representation that captures the text’s semantic content.

Next, an image-generation model gradually transforms noise, or “TV static,” into an output image in order to produce the image. The text encoding is sent to the picture-generation model as input to direct this process. This has the effect of informing the model what is in the caption so it can generate the appropriate image. A little graphic that visually represents the caption we input into the text encoder serves as the output. All other image generators including Imagen AI work this way.

After that, a super-resolution model is used to enlarge the small image to a larger resolution. In order to determine how to behave while “filling in the gaps” of missing information that inevitably result from quadrupling the size of our image, this model also accepts the text encoding as input. What we want appears as a medium-sized image as a result.

Then after that, this medium-sized image is sent to yet another super-resolution model, which works almost just like the first one but expands our medium-sized image to a high-resolution image this time. The resulting image, 1024 x 1024 pixel – suitable in quality for many online mediums – graphically conveys the meaning of our caption.

How do Language Models Work?

All text-to-image generators like Imagen AI use language models to generate their images. A machine learning model called a language model is created to represent the language domain. It can serve as the foundation for a variety of tasks involving language.

Linguistic intuition is what language models aim to simulate. That is not a simple task. As we’ve already stated, linguistic intuition is developed through constant exposure to a language, not through formal education.

Large neural networks are what keep NLP at its current state-of-the-art level. By analyzing millions of data points, neural language models like BERT develop something like to linguistic intuition. This procedure is referred to as “training” in machine learning.

We must devise tasks that force a model to learn a representation of a specific domain in order to train it. Similar to our prior example, filling in the blanks in sentences is a typical task for language modeling. A language model learns to encode the meanings of words and longer text passages through this and other training activities.

What is Diffusion?

Machine learning algorithms that can create new data from training data are referred to as generative models. Other generative models include flow-based models, variational autoencoders, and generative adversarial networks (GANs). Each can generate images of excellent quality, but they all have drawbacks that make them less effective than diffusion models, which is what Imagen AI does.

Diffusion models learn to recover the data by reversing this noise-adding process after first damaging the training data by adding noise. To put it another way, diffusion models are able to create coherent images out of noise.

Diffusion models learn by introducing noise to images, which the model later masters the removal of. In order to produce realistic visuals, the model then applies this denoising technique to random seeds.

By conditioning the image production process, these models can be utilized to generate a virtually unlimited number of images from text alone. Strong text-to-image capabilities can be provided by the seeds with the help of input from embeddings like CLIP. Inpainting, outpainting, bit diffusion, image creation, and image denoising, and image enhancement are just a few of the jobs that diffusion models can do.

Popular diffusion models, besides Google’s Imagen AI, include Open AI’s Dall-E 2 and Stability AI’s Stable Diffusion.

Imagen AI Research Highlights

- Demonstrating the superior performance of big pretrained frozen text encoders for the text-to-image job.

- We demonstrate that scaling the size of the pretrained text encoder is more crucial than scaling the size of the diffusion model.

- The employment of very large classifier-free guide weights is made possible by the introduction of a new thresholding diffusion sampler.

- Introducing a new Efficient U-Net design that converges more quickly and is more memory and compute efficient.

- On COCO, we achieve a new state-of-the-art COCO FID of 7.27; and human raters find Imagen samples to be on-par with reference images in terms of image-text alignment.

Closing Thoughts

The results of Imagen AI speak for themselves and represent yet another major breakthrough in the fields of text-to-image generation and generative modeling in general. Imagen adds to the list of Diffusion Models’ outstanding accomplishments, which have swept the machine learning community off their feet over the past few years with a succession of ridiculously spectacular outcomes.